As stated in the NSX Administration Guide, the NSX Load Balancer enables network traffic to follow multiple paths to a specific destination. This load distribution is transparent to end users. In a nutshell, as with other load balancers, you map an external (or public) IP address to a number of internal servers. This is common when scaling out web services, for example. The load balancer accepts requests (HTTP, for example) on its external IP address and then decides which internal server to send the traffic to.

There are two options for implementing load balancing: inline or one-arm. One-arm mode is also referred to as proxy mode. In this mode, one of the edge gateway’s interfaces is used to advertise the virtual IP. It is fairly straightforward to set up, but the downside is that you need a load balancer per network segment, as the edge router needs to be on the same segment as the internal servers. It can also make traffic analysis harder, as the traffic is ‘proxied’. The alternative is inline mode (also called transparent mode). With inline mode, source NAT isn’t used, so the servers see the real client IP address, and a single NSX Edge can provide load balancing for multiple network segments. The drawback is that with an inline configuration you can’t use a logical distributed router, because the load-balanced servers have to use the edge router as their gateway directly.
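To make the difference between the two modes more concrete, here is a minimal Python sketch of how the addresses on a client packet might be rewritten before it reaches a pool member. It is a simplified model, not NSX’s implementation: the `Packet` class, the `forward_to_member` function and all the addresses are hypothetical, and it assumes the destination virtual IP is always translated to the chosen member, with source NAT applied only in one-arm mode.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str

def forward_to_member(pkt: Packet, vip: str, member_ip: str,
                      edge_ip: str, transparent: bool) -> Packet:
    """Rewrite a client->VIP packet before sending it to a pool member (hypothetical model)."""
    if pkt.dst_ip != vip:
        raise ValueError("packet is not addressed to the virtual IP")
    # Both modes (assumption): destination NAT from the VIP to the chosen member.
    out = replace(pkt, dst_ip=member_ip)
    if not transparent:
        # One-arm (proxy) mode: source NAT to the edge's own address, so the
        # server replies to the edge even if the edge is not its gateway.
        out = replace(out, src_ip=edge_ip)
    # Inline (transparent) mode: the client's source IP is preserved, so the
    # server's reply only returns via the edge if the edge is its gateway.
    return out
```

In the one-arm case the server sees the edge’s address as the source, which is why traffic analysis on the servers loses the client IP; in the inline case the server sees the real client, which is why it must route its replies through the edge.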

NSX provides load balancing up to OSI layer 7. This post looks at configuring NSX load balancing. Before we get started, though, you need to have deployed an NSX Edge Services Gateway.

Configuring NSX Load Balancing

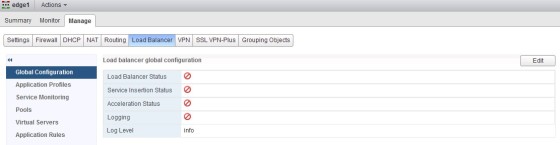

We start by enabling the load balancer service on the NSX Edge router. To do so, navigate to ‘Networking and Security’ in the web client, then to ‘NSX Edges’. Once there, double-click the Edge router instance you wish to configure, then select the ‘Load Balancer’ tab:

Click the ‘Edit’ button, then tick ‘Enable Load Balancer’:

The ‘Enable Service Insertion’ option is used if you want to integrate a third-party load balancer. Note that if you aren’t going to use L7 features, you can tick ‘Enable Acceleration’, which disables L7 features whilst making L4 load balancing faster. Finally, you can optionally enable logging and set an appropriate logging level. Click OK once you are satisfied with the configuration.

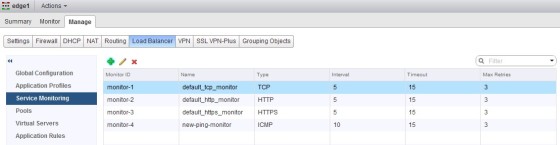

The next step is to create a service monitor, which defines how the load-balanced servers are monitored. The NSX load balancer needs to check the health of the servers so that it can decide whether to send them user traffic. There are a few ways to check that an endpoint is alive: the load balancer can send an HTTP(S) request, open a connection to a specific port, or send an ICMP ping. To add a service monitor, click the ‘Service Monitoring’ menu item on the Load Balancer tab, then click the green ‘plus’ symbol to create a new service monitor:

I’ve decided to use a simple ICMP ping monitor. Once created you should see the new service monitor listed, along with the pre-defined default service monitors:

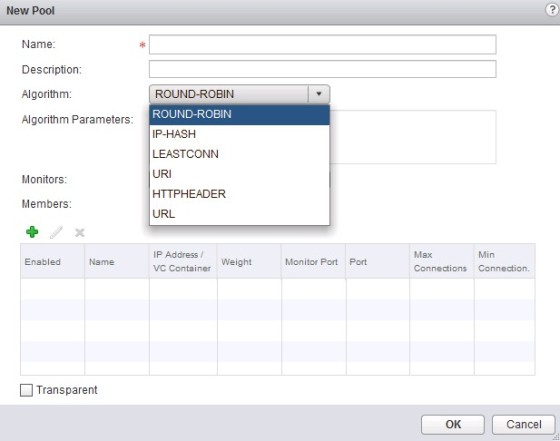
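This post uses an ICMP monitor, but sending raw ICMP packets usually requires elevated privileges, so the minimal Python sketch below illustrates the port-check style of monitor instead: a member counts as healthy if a TCP connection to it succeeds within a timeout. The function names `tcp_monitor` and `healthy_members` are hypothetical, and this is not how NSX implements its monitors.

```python
import socket

def tcp_monitor(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def healthy_members(members: list[tuple[str, int]],
                    timeout: float = 1.0) -> list[tuple[str, int]]:
    """Keep only the (host, port) pool members that pass the TCP check."""
    return [(host, port) for host, port in members
            if tcp_monitor(host, port, timeout)]
```

For example, `healthy_members([("10.0.1.11", 80), ("10.0.1.12", 80)])` would return only the (hypothetical) web servers that currently accept connections on port 80; a load balancer would then only send user traffic to those members.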

The next step is to configure a server pool. Server pools are where the load balanced servers are defined. When creating a pool, you will be presented with a choice of which load balancing algorithm to use:

ROUND-ROBIN – Traffic is allocated to each server in turn, based on the weight assigned to each server in the pool. For example, if all the servers in the pool are weighted equally, they each get an equal share of requests.

IP HASH – The server to which traffic is sent is determined by a hash of the source and destination IP addresses.

LEAST-CONN – This one is straightforward. When a new request hits the load balancer, it is sent to the server in the pool with the fewest active connections.

URI – The request URI is hashed, and the result determines which server receives the request, so requests for the same URI are sent to the same server.
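The four algorithms can be sketched in Python as follows. This is an illustrative model only, not the NSX Edge implementation: the `Member` class and the function names are hypothetical, the weighted round-robin is the simplest possible version (each member appears `weight` times per cycle), the hash-based selectors ignore weights, and the URI selector assumes only the part before any `?` is hashed.

```python
import hashlib
import itertools
from collections.abc import Iterator
from dataclasses import dataclass

@dataclass
class Member:
    name: str
    weight: int = 1
    active_connections: int = 0

def weighted_round_robin(members: list[Member]) -> Iterator[Member]:
    """ROUND-ROBIN: hand out members in turn, each `weight` times per cycle."""
    schedule = [m for m in members for _ in range(m.weight)]
    return itertools.cycle(schedule)

def _stable_hash(text: str) -> int:
    # Python's built-in hash() is randomised per process, so use a digest
    # to get the same result on every run.
    return int.from_bytes(hashlib.sha256(text.encode()).digest()[:8], "big")

def ip_hash(members: list[Member], src_ip: str, dst_ip: str) -> Member:
    """IP HASH: the same source/destination pair always maps to the same member."""
    return members[_stable_hash(f"{src_ip}|{dst_ip}") % len(members)]

def least_conn(members: list[Member]) -> Member:
    """LEAST-CONN: pick the member with the fewest active connections."""
    return min(members, key=lambda m: m.active_connections)

def uri_hash(members: list[Member], uri: str) -> Member:
    """URI: the same URI path always maps to the same member."""
    path = uri.split("?", 1)[0]  # assumption: the query string is not hashed
    return members[_stable_hash(path) % len(members)]
```

With `web1` weighted 2 and two other members weighted 1, the round-robin iterator yields web1, web1, web2, web3 and then repeats, so web1 receives half of the requests. Note that the hash-based selectors only stay “sticky” while the pool membership is unchanged, since adding or removing a member changes the modulus.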

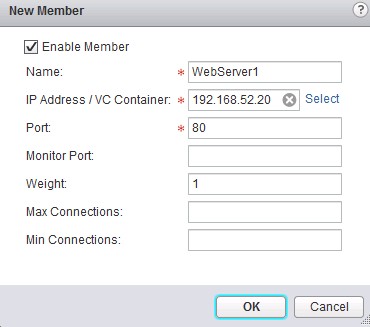

After naming the pool and choosing the load balancing algorithm and the service monitor to use, add the pool members by clicking the green ‘plus’ symbol:

This is where you set the connection details for each pool member, along with any weighting and the minimum and maximum number of connections. After adding your pool members, the last step here is to select the mode. Ticking the ‘Transparent’ box sets the load balancer to inline mode, whereas leaving it unchecked results in one-arm mode.

After creating the server pool, the next step is to create an application profile. The application profile contains rules that determine how the NSX Load Balancer handles an application; for example, this is where you can configure SSL offloading. To create an application profile, click on the ‘Application Profiles’ menu item, then click on the green ‘plus’ symbol:

Finally, we need to create a Virtual Server. This is what links together everything we have configured up until now. To start, click the ‘Virtual Servers’ menu item, then click the green ‘plus’ to add a new virtual server:

In the ‘New Virtual Server’ window, select the Application Profile to be used and set a name to identify the virtual server. Next, set the IP address to be used (this is an address on the NSX Edge router’s external interface), then select the protocol to be load balanced, the port, and the server pool that the traffic will be sent to. Optionally, configure the connection limit settings. And that’s it! You should now be load balancing connections to the internal server pool members.
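The way the virtual server ties together the monitor, pool and application profile can be pictured as a small data model. The Python sketch below is hypothetical: the class and field names are made up for illustration and do not mirror the NSX API or its configuration schema.

```python
from dataclasses import dataclass, field

@dataclass
class ServiceMonitor:
    name: str
    kind: str  # e.g. "icmp", "tcp", "http"

@dataclass
class Pool:
    name: str
    algorithm: str  # "ROUND-ROBIN", "IP HASH", "LEAST-CONN" or "URI"
    monitor: ServiceMonitor
    members: list[tuple[str, int]] = field(default_factory=list)  # (ip, port)
    transparent: bool = False  # True = inline mode, False = one-arm mode

@dataclass
class ApplicationProfile:
    name: str
    ssl_offload: bool = False

@dataclass
class VirtualServer:
    name: str
    ip: str        # the virtual IP on the edge's external interface
    protocol: str
    port: int
    profile: ApplicationProfile
    pool: Pool

    def summary(self) -> str:
        """Describe how traffic hitting the virtual IP is handled."""
        mode = "inline" if self.pool.transparent else "one-arm"
        targets = ", ".join(f"{ip}:{port}" for ip, port in self.pool.members)
        return (f"{self.protocol} {self.ip}:{self.port} [{self.profile.name}] -> "
                f"{self.pool.name} ({self.pool.algorithm}, {mode}, "
                f"monitor {self.pool.monitor.name}): {targets}")
```

Building a `VirtualServer` with an ICMP monitor, a ROUND-ROBIN pool of two web servers and port 80 mirrors the configuration walked through above; setting `transparent=True` on the pool corresponds to ticking the ‘Transparent’ box.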

Useful Links and Resources

https://www.vmware.com/files/pdf/products/nsx/vmw-nsx-network-virtualization-design-guide.pdf

https://pubs.vmware.com/NSX-6/topic/com.vmware.ICbase/PDF/nsx_6_admin.pdf