This post looks at tuning ESXi host CPU configuration, in relation to the VCAP-DCA objective of the same name. The vSphere 5 Performance Best Practices guide makes a number of recommendations:

- ESXi allows significant CPU overcommitment. Consolidation ratios between 3:1 and 5:1 are typical.

- Monitor CPU performance using esxtop/resxtop. If the CPU load average on the first line of the CPU panel is greater than 1 then it indicates that the system is overloaded:

12:41:08am up 4:31, 278 worlds, 0 VMs, 0 vCPUs; CPU load average: 0.01, 0.01, 0.00

PCPU USED(%): 1.2 0.3 0.7 1.2 AVG: 0.9

PCPU UTIL(%): 1.7 0.5 0.7 1.4 AVG: 1.1

- Configuring virtual machines with more CPU resources than they need can negatively impact performance. An example of this would be a single-threaded workload running in a multiple-vCPU VM.
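The load average check from the esxtop recommendation above can be scripted if you capture the header line as text. This is only a rough sketch: the function names are my own, and it assumes the header keeps the "CPU load average: a, b, c" layout shown in the sample output (esxtop reports the averages over one, five and fifteen minutes).

```python
import re


def parse_load_averages(header_line):
    """Return the three CPU load averages from an esxtop header line as floats."""
    match = re.search(
        r"CPU load average:\s*([\d.]+),\s*([\d.]+),\s*([\d.]+)", header_line
    )
    if match is None:
        raise ValueError("no CPU load average found in line")
    return tuple(float(value) for value in match.groups())


def is_overloaded(header_line, threshold=1.0):
    """Return True if any of the load averages is above the threshold."""
    return any(avg > threshold for avg in parse_load_averages(header_line))


# Header line taken from the sample esxtop output in this post.
header = (
    "12:41:08am up 4:31, 278 worlds, 0 VMs, 0 vCPUs; "
    "CPU load average: 0.01, 0.01, 0.00"
)
print(parse_load_averages(header))
print(is_overloaded(header))
```

The idle lab host above is nowhere near the threshold, so the check comes back negative.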

Single-Core (UP) and Multicore (SMP) HALs

Uniprocessor (UP, single-core) and Symmetric Multiprocessor (SMP, multicore) are the two types of hardware abstraction layer (HAL)/kernel used by a VM's guest operating system. Some newer operating systems use a single HAL for both single and multicore configurations; however, other operating systems, such as Windows 2000, should have the correct HAL configured. For example, a virtual machine with a single vCPU should have a uniprocessor HAL.

CPU Scheduler

The CPU scheduler is responsible for scheduling the vCPUs on physical CPUs. It takes into account any shares and reservations that apply, is NUMA-aware and provides co-scheduling for SMP virtual machines. The CPU scheduler periodically checks physical CPU utilization (every 2 to 40 ms). If a particular core is saturated, “worlds” will be moved to a different core. When the host’s CPU resources are under contention, the host uses the proportional-share algorithm to allocate resources appropriately. More on the CPU Scheduler here.
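To get a feel for what proportional-share allocation means under contention, here is a deliberately simplified sketch. It is not the ESXi scheduler: it ignores reservations, limits, co-scheduling and idle VMs, and simply splits a contended amount of CPU in proportion to each VM's shares. The function name and VM names are hypothetical.

```python
def allocate_by_shares(capacity_mhz, shares):
    """Split contended CPU capacity between VMs in proportion to their shares.

    Simplified illustration only: reservations and limits are ignored.
    """
    total_shares = sum(shares.values())
    if total_shares <= 0:
        raise ValueError("total shares must be positive")
    return {
        vm: capacity_mhz * vm_shares / total_shares
        for vm, vm_shares in shares.items()
    }


# Hypothetical example: one single-vCPU VM with High shares (2000) and two
# with Normal shares (1000) competing for 4000 MHz.
entitlements = allocate_by_shares(
    4000, {"vm-a": 2000, "vm-b": 1000, "vm-c": 1000}
)
for vm, mhz in entitlements.items():
    print(vm, mhz)
```

The VM with twice the shares ends up with twice the CPU time of each of the others, but only while the host is actually contended.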

NUMA

There is a useful explanation of Non-Uniform Memory Access here. The CPU scheduler takes NUMA into account: it assigns a VM to a NUMA node (CPU/memory) and then attempts to give the VM its CPU and memory resources from that node. For this to work properly, a single VM shouldn’t be allocated more vCPUs than there are cores on a particular socket, and shouldn’t be allocated more memory than each NUMA node provides.
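The sizing rule above is easy to express as a quick check. A minimal sketch, assuming one NUMA node per socket; the `NumaNode` class, the function name and the host figures are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class NumaNode:
    """One NUMA node: the cores on a socket and the memory local to it."""
    cores: int
    memory_gb: float


def fits_in_numa_node(vcpus, vm_memory_gb, node):
    """Return True if the VM's vCPUs and memory both fit inside one node."""
    return vcpus <= node.cores and vm_memory_gb <= node.memory_gb


# Hypothetical host: two sockets, each with 8 cores and 64 GB of local memory.
node = NumaNode(cores=8, memory_gb=64)

print(fits_in_numa_node(4, 32, node))   # small VM
print(fits_in_numa_node(12, 32, node))  # more vCPUs than cores on a socket
print(fits_in_numa_node(4, 96, node))   # more memory than one node provides
```

Only the first VM passes; the other two would have to span NUMA nodes.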

Hyper-Threading

Hyper-threading allows two independent threads to run simultaneously. It makes a single processor core seem like two logical processors and, though it doesn’t double performance like having twice the cores would, it does give performance benefits. If your hardware supports hyper-threading then ESXi will automatically make use of it – though you may have to enable it in the host’s BIOS first.

There are some virtual machine configuration parameters in relation to hyper-threading to be aware of. On the virtual machine’s resources tab, under Advanced CPU, you can change the Hyperthreaded Core Sharing setting:

There are three modes: Any, None and Internal. Any is the default choice. When None is set, a vCPU from the VM that is assigned a logical processor gets the whole core to itself: no other vCPU will be assigned to the other logical processor on the same core. This is essentially disabling hyper-threading for the virtual machine. Internal is similar to None in that no other virtual machine’s vCPU can share the core; however, another vCPU from the same virtual machine can share it.
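The three modes boil down to a simple rule about which pairs of vCPUs may sit on the two logical processors of one core. The sketch below models that rule as described in this post; it is my own illustration (the function and VM names are hypothetical), not how the VMkernel implements it.

```python
def may_share_core(mode_a, vm_a, mode_b, vm_b):
    """Return True if two vCPUs may run on the same hyper-threaded core.

    mode_a/mode_b are the core sharing modes ("any", "none" or "internal")
    of the VMs that own each vCPU; vm_a/vm_b identify those VMs.
    """
    modes = {mode_a, mode_b}
    if not modes <= {"any", "none", "internal"}:
        raise ValueError("unknown core sharing mode")
    if "none" in modes:
        # A vCPU in None mode never shares its core with anything.
        return False
    if "internal" in modes:
        # Internal mode only allows sharing with vCPUs of the same VM.
        return vm_a == vm_b
    # Both VMs are in Any mode, the default.
    return True


print(may_share_core("any", "vm1", "any", "vm2"))
print(may_share_core("none", "vm1", "any", "vm2"))
print(may_share_core("internal", "vm1", "internal", "vm1"))
print(may_share_core("internal", "vm1", "any", "vm2"))
```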

Hyper-threading can be enabled/disabled on a host by navigating to the host’s configuration tab. Under processors, click properties:

Note that if you change this option, a reboot of the host will be required.

CPU Affinity

CPU affinity allows you to choose which physical processor(s) you want a virtual machine to run on. The option is only available for virtual machines that are not in a DRS cluster, or for those that have DRS set to manual.

The setting can be found in a virtual machine’s settings, on the resources tab, under Advanced CPU:

There are a number of issues to be aware of when using CPU affinity. For example, it can interfere with the host’s ability to meet reservations and shares specified for the virtual machine. Other issues are listed here.
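The affinity field takes a list of processor numbers. The sketch below assumes the comma-and-range format the vSphere Client accepts (for example "0, 2, 4-7") and expands it into the individual logical CPUs, rejecting anything the host doesn't have. The function name and the `logical_cpus` parameter are my own; this is handy for sanity-checking a value before you type it in, nothing more.

```python
def parse_affinity(spec, logical_cpus):
    """Expand an affinity string such as "0, 2, 4-7" into a sorted CPU list.

    logical_cpus is the number of logical processors on the host, numbered
    from 0. Raises ValueError for malformed or out-of-range entries.
    """
    selected = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start_text, end_text = part.split("-", 1)
            start, end = int(start_text), int(end_text)
        else:
            start = end = int(part)
        if start > end:
            raise ValueError(f"range {part!r} is reversed")
        selected.update(range(start, end + 1))
    out_of_range = sorted(cpu for cpu in selected if cpu >= logical_cpus)
    if out_of_range:
        raise ValueError(f"host has no logical CPUs {out_of_range}")
    return sorted(selected)


# Hypothetical host with 8 logical processors.
print(parse_affinity("0, 2, 4-7", 8))
```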

Power Management

ESXi 5 provides five power management policies:

- Not Supported – The physical server does not support power management or it is not enabled in the BIOS.

- High Performance – The VMkernel detects power management features but uses them only if the BIOS requests them for power capping or thermal events.

- Balanced (Default) – The VMkernel uses the available power management features conservatively to reduce host energy consumption without substantial performance degradation.

- Low Power – The VMkernel aggressively uses available power management features to reduce host energy consumption.

- Custom – Power management policy determined by advanced configuration parameters.

To set the power management policy for a host, navigate to the host’s configuration tab. Select Hardware | Power Management | Properties:

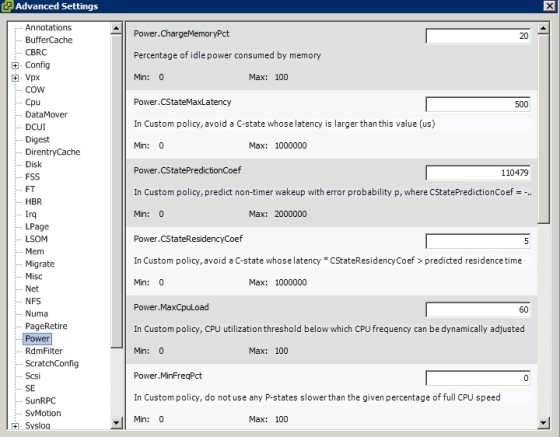

To configure custom power management policies on a host you need to edit the advanced power settings. Navigate to the host’s configuration tab. Select Software | Advanced Settings, then select Power:

Useful Links and Resources

https://www.vmware.com/pdf/Perf_Best_Practices_vSphere5.0.pdf