Where vSphere HA restarts a virtual machine after a host failure, vSphere Fault Tolerance goes a step further: it provides continuous availability by running an identical secondary virtual machine, kept in sync with the primary, which takes over should the primary VM suffer an outage due to host failure. It is explained well in this document from VMware.

Cluster, Host and Virtual Machine Requirements for FT

FT has a number of requirements that your vSphere environment must meet before it will work. At the cluster level, all hosts should be capable of running the same version of FT (or same build number), and should have access to the same datastores and networks, as with HA. In fact, FT requires HA to be enabled on the cluster. You can get the FT version the host is using from the host's summary tab.

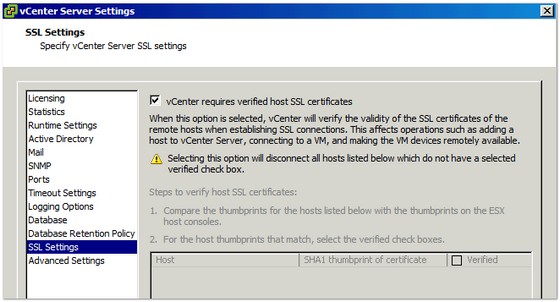

In addition to the above, host certificate checking must be enabled (though already likely is, as this is the default). You can check by going to the ‘vCenter Server Settings’ on the Administration menu, then selecting ‘SSL Settings’:

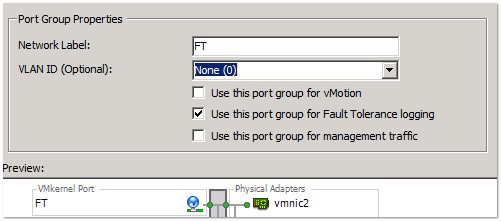

vMotion needs to be enabled and configured correctly, and an FT logging network needs to be configured.

In terms of host requirements, each host should have FT-compatible processors and must be licensed appropriately to use FT (this requires an Enterprise or Enterprise Plus license). Check the VMware Compatibility Guide to ensure that the hardware is supported for use with FT. Finally, Hardware Virtualisation must be enabled in the host’s BIOS.

There are quite a few requirements for FT, so far as virtual machines are concerned:

- An FT VM can use only virtual hardware that is supported for use with FT. This rules out SMP, physical RDMs, thin-provisioned disks, NPIV, and CD-ROM and floppy drives, amongst others.

- The VM’s disks should be either virtual-mode RDMs or thick-provisioned VMDKs (physical RDMs are not supported, as noted above).

- As with HA, the VM’s files have to be on shared storage.

- Only a single vCPU can be used on a FT VM. Maximum memory allocation is 64 GB.

- The virtual machine must be running a supported guest OS.

- The virtual machine cannot have any snapshots in place.
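The virtual machine requirements above lend themselves to a simple pre-flight check. The following is only a minimal sketch: the `VmSpec` class and its field names are hypothetical and do not correspond to any VMware API, and the values would have to be gathered from your own inventory. It covers only the checks that can be expressed as simple values (vCPU count, memory, thin disks, shared storage, snapshots).

```python
from dataclasses import dataclass

# Hypothetical description of a VM. These field names are illustrative
# only and are not taken from any VMware SDK.
@dataclass
class VmSpec:
    name: str
    vcpus: int
    memory_gb: int
    has_thin_disks: bool = False
    on_shared_storage: bool = True
    snapshot_count: int = 0

MAX_FT_MEMORY_GB = 64  # limit quoted in the list above


def ft_vm_issues(vm):
    """Return a list of reasons why the VM would not qualify for FT."""
    issues = []
    if vm.vcpus != 1:
        issues.append("FT VMs must have exactly one vCPU")
    if vm.memory_gb > MAX_FT_MEMORY_GB:
        issues.append("memory exceeds 64 GB")
    if vm.has_thin_disks:
        issues.append("thin-provisioned disks are not supported")
    if not vm.on_shared_storage:
        issues.append("VM files must be on shared storage")
    if vm.snapshot_count > 0:
        issues.append("snapshots must be removed first")
    return issues


if __name__ == "__main__":
    candidate = VmSpec(name="db01", vcpus=2, memory_gb=16, snapshot_count=1)
    for issue in ft_vm_issues(candidate):
        print(f"{candidate.name}: {issue}")
```

An empty list means none of these particular checks failed; it does not replace checking the guest OS and virtual hardware against VMware's documentation.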

Configuration Steps to Enable vSphere Fault Tolerance Functionality

Once you have verified that your hosts are compatible with fault tolerance from a hardware perspective, you can begin to configure them for FT.

The first step (as mentioned above) is to ensure that host certificate checking is enabled. Next is the network configuration. As mentioned earlier, vMotion must be configured in order for FT to be enabled. FT also needs dedicated networking for FT logging. This is a virtual interface (like with vMotion) which must be on a separate VLAN/subnet to the vMotion interface. Also note that IPv6 is not supported on the FT logging interface.
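As a rough illustration of the two network rules just mentioned (a separate subnet from vMotion, and no IPv6 on the FT logging interface), here is a small sketch using Python's standard `ipaddress` module. The function name and the example addresses are made up for illustration; the check works purely on the addresses you plan to assign and does not query a host.

```python
import ipaddress


def ft_logging_network_issues(vmotion_cidr, ft_logging_cidr):
    """Return a list of problems with the planned FT logging interface.

    Both arguments are interface addresses in CIDR form,
    e.g. "10.0.10.11/24".
    """
    issues = []
    vmotion = ipaddress.ip_interface(vmotion_cidr)
    ft_log = ipaddress.ip_interface(ft_logging_cidr)

    # IPv6 is not supported on the FT logging interface.
    if ft_log.version == 6:
        issues.append("FT logging interface uses IPv6")

    # FT logging must sit on a different subnet from vMotion.
    if (vmotion.version == ft_log.version
            and vmotion.network.overlaps(ft_log.network)):
        issues.append("FT logging and vMotion share a subnet")

    return issues


if __name__ == "__main__":
    # Example addresses only.
    print(ft_logging_network_issues("10.0.10.11/24", "10.0.10.12/24"))
```

VLAN separation cannot be verified from IP addresses alone, so that part still needs checking on the port groups and physical switches.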

Once the networking is configured, you can check that your host is compliant for using FT. First check the host’s summary tab. Both ‘vMotion Enabled’ and ‘Host Configured for FT’ should display ‘Yes’. If either doesn’t, check the speech bubble next to the setting to see what issues there are.

You can also check your hosts’ compliance for FT from the cluster’s ‘Profile Compliance’ tab. Here you can check the cluster’s hosts against the requirements for various cluster features, including fault tolerance.

VMware Best Practices when Implementing vSphere Fault Tolerance

VMware have published a number of best practices to bear in mind when building your environment to support the use of FT. These include:

- The hosts running primary and secondary FT VMs should have the same CPU speed. Indeed, FT works best when all the hosts in the cluster are of the same specification

- If bandwidth is an issue on the FT logging network, use 10 Gbit NICs and Jumbo Frames. Sub-millisecond latency is recommended for the FT logging network

- Avoid Network Partitions

- A maximum of four FT VMs (primary or secondary) on any single host

- A maximum of 16 virtual disks per FT virtual machine

- Ensure the hosts’ time is synchronised with a time server

There are more best practice recommendations in this document from VMware.
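A few of the best practices listed above are numeric and easy to audit. The sketch below assumes you have already exported your FT placements, disk counts and host CPU speeds into plain Python structures; the function name and the input shapes are hypothetical and nothing here talks to vCenter.

```python
from collections import Counter

MAX_FT_VMS_PER_HOST = 4    # primary or secondary, per the list above
MAX_DISKS_PER_FT_VM = 16


def ft_best_practice_warnings(placements, disk_counts, host_cpu_mhz):
    """Check a planned FT layout against a few of the listed best practices.

    placements   -- list of (vm_name, role, host) tuples, where role is
                    "primary" or "secondary"
    disk_counts  -- dict mapping vm_name to number of virtual disks
    host_cpu_mhz -- dict mapping host name to CPU speed in MHz
    """
    warnings = []

    # Count FT VMs (of either role) on each host.
    per_host = Counter(host for _vm, _role, host in placements)
    for host, count in sorted(per_host.items()):
        if count > MAX_FT_VMS_PER_HOST:
            warnings.append(
                f"{host}: {count} FT VMs (limit {MAX_FT_VMS_PER_HOST})")

    for vm, disks in sorted(disk_counts.items()):
        if disks > MAX_DISKS_PER_FT_VM:
            warnings.append(
                f"{vm}: {disks} virtual disks (limit {MAX_DISKS_PER_FT_VM})")

    # Hosts running FT VMs should have the same CPU speed.
    if len(set(host_cpu_mhz.values())) > 1:
        warnings.append("hosts have differing CPU speeds")

    return warnings
```

Recommendations such as avoiding network partitions or keeping FT logging latency low cannot be checked this way and need monitoring on the network itself.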